This project is created in p5.js and you can view the desktop version right now.

I’m still ironing out some glitches in the mobile version, but you can see the latest mobile version here.

Other than a handful of birds of prey, we have the best eyesight in the animal kingdom. It’s largely sight that we have evolved around. Even if we’re not spotting prey on the savannah with sight and spear, we’re telling a box of Coco Pops from a box of Weetabix in an instant at the supermarket. Sight plays a big part in our life experience.

But what about sound? Does it take a back seat to sight, or is it just as important in sculpting our world? Or is it precisely because eyesight is our dominant sense that hearing supplies a largely unconscious understanding of the world: acting intuitively, vital but unnoticed?

This project asks, what if we illuminated sound, gave it texture or motion, colour or form? Would the priority change? Would we ‘see’ things differently? For the visually impaired, this is already a truth.

The purpose of this project is to take a deep look at the role sound plays in ordinary lives by bringing it into focus.

Here’s the journey thus far…

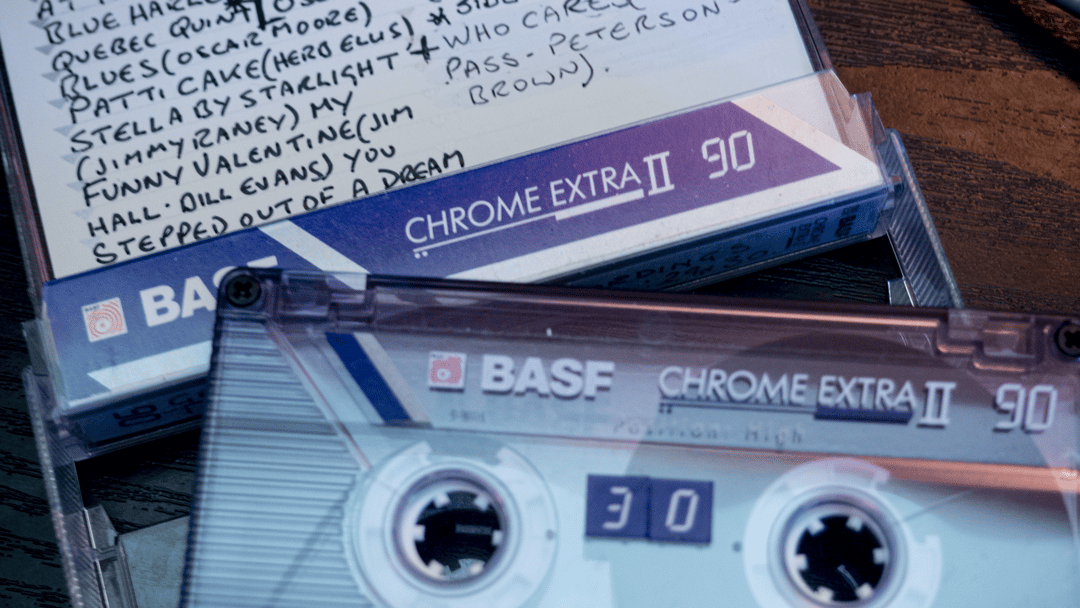

I began with this cassette, a cassette that was originally a gift from my grandfather.

The idea originally came from a story tied to the gift. After a life spent in London jazz clubs, singing in some of them, my grandfather was a true fan of the craft. As he aged and his hearing slowly diminished, he set out to go systematically through his entire record collection, listening to the best of the best before his hearing went entirely and the world of music was lost to him.

The cassette tape has become emblematic of this story, and the story ended up being the inspiration for the project: how music informs our experience of the world, and how music tells us who we are.

I set about making this idea literal: having music and sound feed back into a real visual manifestation of the world, blended with music to essentially manipulate our understanding of what the world “is”.

The project took many directions before settling on the final one.

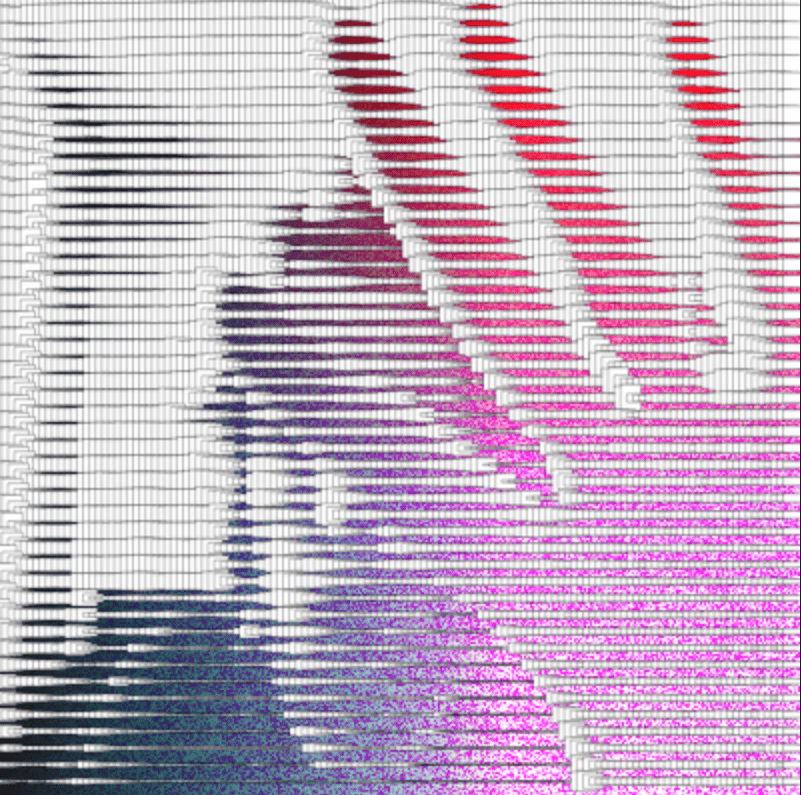

I began by coding a camera pixel generator, converting the live video feed into a low-resolution line of rectangles, and then coded sound input to manipulate the height of those rectangles: first with sound level input, and then with EQ response for a more expressive visualisation of sound.
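
To make the step above concrete, here is a minimal sketch of the idea rather than the project's actual code. The function names (`sampleRow`, `heightsFromLevel`, `heightsFromSpectrum`) and their parameters are hypothetical, chosen only for illustration. It assumes an RGBA pixel array laid out the way p5.js exposes `pixels`, a sound level between 0 and 1, and spectrum values between 0 and 255.

```javascript
// Hypothetical helpers for illustration only; not taken from the project.
// Assumes width >= columns and spectrum.length >= columns.

// Average the colour of each of `columns` equal-width blocks along one row
// of a flat RGBA pixel array (4 values per pixel: R, G, B, A).
function sampleRow(pixels, width, rowY, columns) {
  const blockWidth = Math.floor(width / columns);
  const colours = [];
  for (let c = 0; c < columns; c++) {
    let r = 0, g = 0, b = 0;
    for (let x = c * blockWidth; x < (c + 1) * blockWidth; x++) {
      const i = 4 * (rowY * width + x);
      r += pixels[i];
      g += pixels[i + 1];
      b += pixels[i + 2];
    }
    colours.push([r / blockWidth, g / blockWidth, b / blockWidth]);
  }
  return colours;
}

// First approach: every rectangle shares one height, driven by the
// overall sound level (expected range 0..1, clamped here).
function heightsFromLevel(level, columns, maxHeight) {
  const clamped = Math.min(Math.max(level, 0), 1);
  return new Array(columns).fill(clamped * maxHeight);
}

// Second approach: each rectangle follows the average energy of its own
// slice of the frequency spectrum (values expected in 0..255).
function heightsFromSpectrum(spectrum, columns, maxHeight) {
  const binsPerColumn = Math.floor(spectrum.length / columns);
  const heights = [];
  for (let c = 0; c < columns; c++) {
    let sum = 0;
    for (let k = c * binsPerColumn; k < (c + 1) * binsPerColumn; k++) {
      sum += spectrum[k];
    }
    heights.push((sum / binsPerColumn / 255) * maxHeight);
  }
  return heights;
}
```

In a p5.js sketch, inputs like these could plausibly come from `createCapture(VIDEO)` followed by `loadPixels()`, from `getLevel()` on a `p5.AudioIn`, and from `analyze()` on a `p5.FFT` (the latter two from the p5.sound add-on), with each colour and height pair drawn using `fill()` and `rect()`. Splitting the spectrum into one slice per rectangle is what lets bass and treble move different parts of the image, which is why the EQ version reads as more expressive than a single shared level.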